Critical Appraisal - How to evaluate a medical research article

Key message: An evaluation is always done by comparison with a standard.

Science has to be reproducible

Science is new, reproducible and useful knowledge. When scientists publish new knowledge, they must describe with sufficient accuracy how they gained it. The relevant information must be presented clearly and logically to the readership. Usually this is done in a research article published in a scientific journal.

"Critical appraisal of the quality of clinical trials is possible only if the design, conduct, and analysis ... are thoroughly and accurately described in the report." (www.consort-statement.org/Media/Default/Downloads/CONSORT%202010%20Explanation%20and%20Elaboration%20Document-BMJ.pdf, 17.06.21, p. 1)

To improve the reporting (not the quality) of empirical studies, guidelines and checklists were developed.

"... Trial reports need be clear, complete, and transparent. Readers, peer reviewers, and editors can also use CONSORT to help them critically appraise and interpret reports of RCTs. However, CONSORT was not meant to be used as a quality assessment instrument." (www.sciencedirect.com/science/article/pii/S0895435610001034, 10.05.21)

"... these recommendations are not prescriptions for designing or conducting studies. Also, while clarity of reporting is a prerequisite to evaluation, the checklist is not an instrument to evaluate the quality of observational research." (www.sciencedirect.com/science/article/pii/S174391911400212X, 10.05.21)

An organization that evaluates the quality of medical research is Cochrane:

"Cochrane Reviews base their findings on the results of studies that meet certain quality criteria, since the most reliable studies will provide the best evidence for making decisions about health care." (www.cochranelibrary.com/about/about-cochrane-reviews , 11.05.21)

Evaluating a study means determining the extent to which its results are valid. This is very hard to do when the evaluator was not involved in the study. Therefore, it is more reasonable to consider whether the results are at risk of bias than to say with certainty that they are biased.

Bias is not a random but a systematic error, meaning that repeating the study in the same way will produce the same wrong results. The validity of a research study includes two aspects: internal and external validity. Internal validity is the extent to which the observed results are valid in the population under study and thus are not due to systematic errors. External validity is the extent to which the results of a study can be transferred to other populations and settings.

"Critical appraisal is the process of carefully and systematically examining research to judge its trustworthiness, and its value and relevance in a particular context." (www.bandolier.org/painres/download/whatis/What_is_critical_appraisal.pdf, 27.05.21)

Is it possible to examine trustworthiness without knowing the original data and how they were obtained? The evaluation of research is a task for professionals (Cochrane uses a handbook for its reviews). But every reader of a scientific article presenting an empirical study can form her own opinion by judging the elements of the study.

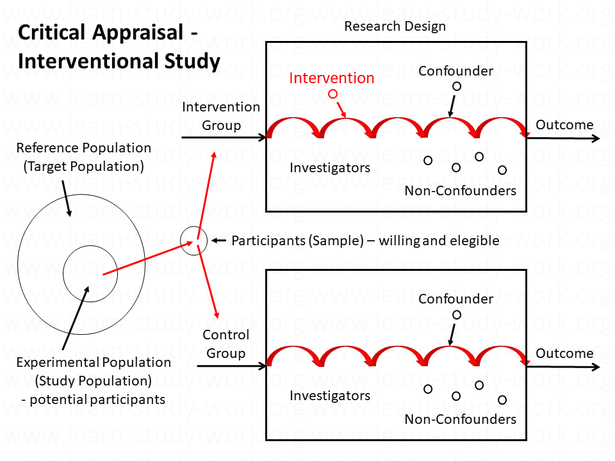

Example "Interventional Study"

The elements of an interventional study are:

- The objective and the research design

- The participants

- The intervention

- The potential confounders

- The outcome

- The study investigators

- The report

1. The Objective and the Research Design

The introduction explains the rationale for the study. It explains what is already known about the topic, where a knowledge gap exists, and why this knowledge gap is a problem. The objective of the study is to fill this knowledge gap and thus to help find the solution to the problem.

It makes no sense to set an objective without knowing how it can be achieved. Therefore, the authors must indicate the research design that they used for the study (in the title, the abstract, the introduction or the methods section). The reader should now judge:

- Is the background knowledge adequately described?

- Is the problem clearly explained?

- Is the objective set reasonably?

- Does the research design correspond to the intended objective?

2. The Participants

It is often difficult to find the appropriate participants for a study.

"Recruitment ... begins with the identification, targeting and enlistment of participants (volunteer patients or controls) for a research study. It involves providing information to the potential participants and generating their interest in the proposed study. There are two main goals of recruitment:

• to recruit a sample that adequately represents the target population;

• to recruit sufficient participants to meet the sample size and power requirements of the study (Hulley et al, 2001; Keith, 2001)." (Patel MX, Doku V & Tennakoon T (2003) Challenges in recruitment of research participants. Advances in Psychiatric Treatment 9, p. 229)

If the sample size is too small, the results of the study can be misleading. A sample size that is too large leads to unnecessary expenditure of effort and finances.
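As an illustration of how a required sample size can be estimated, the following minimal Python sketch uses the common normal-approximation formula for comparing the means of two equally sized groups. The function name, the default significance level of 5%, the default power of 80% and the standardized effect size of 0.5 are assumptions chosen for this example; they do not come from the cited sources.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate number of participants needed in each of two groups.

    effect_size is the expected difference between the group means
    divided by the standard deviation (an assumed, standardized value).
    Uses the normal approximation n = 2 * (z_alpha + z_beta)^2 / d^2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


# Hypothetical example: a medium standardized effect of 0.5
print(sample_size_per_group(0.5))  # prints 63
```

The smaller the expected effect, the larger the required sample. A study report should therefore state which effect size, significance level and power were assumed in its sample size calculation.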

"We cannot expect the results of RCTs [randomised controlled trials] ... to be relevant to all patients and all settings (that is not what is meant by external validity) but they should at least be designed and reported in a way that allows clinicians to judge to whom they can reasonably be applied." (Rothwell PM. (2005) External validity of randomised controlled trials: “To whom do the results of this trial apply?” Lancet 365, p. 83)

Patients are selected according to trial eligibility criteria (inclusion and exclusion criteria). A description of the eligibility criteria used to select study participants is necessary to evaluate the study.

The idea of a controlled trial is to compare groups of participants that differ only with respect to the intervention (treatment). Therefore, any differences in baseline characteristics between the groups should be the result of chance rather than bias. This is best achieved by randomized allocation of the participants to the groups. Random assignment ensures that participants are allocated to the treatment and control groups completely at random, without regard to the intent of the investigators or the patients' condition and preferences.

"The response to and/or compliance with a treatment can be influenced strongly by the doctor-patient relationship, placebo effects, and patient preference. Yet trialists rightly try where possible to eliminate any effect of these factors by using blinded treatment allocation, placebo control, and exclusion of patients or clinicians who have strong treatment preferences." (Rothwell PM. (2005) External validity of randomised controlled trials: “To whom do the results of this trial apply?” Lancet 365, p. 83)

Allocation concealment prevents researchers from influencing which participants are assigned to a particular intervention group. Blinding means that study participants, providers of health-care, investigators, or data collectors are not informed about the assigned treatment so that they are not influenced by this knowledge.
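A minimal Python sketch of simple random allocation into two groups of (nearly) equal size is given here for illustration. The participant identifiers and the function name are hypothetical. To keep the allocation concealed, the sequence would have to be generated and kept by someone who is not involved in recruiting the participants.

```python
import random


def allocate(participant_ids, seed=None):
    """Randomly split participants into an intervention and a control group.

    The order of the participants is shuffled by a random number
    generator, so neither investigators nor patients can influence
    the group assignment.
    """
    rng = random.Random(seed)  # a seed makes the sequence reproducible for audits
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "intervention": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }


# Hypothetical example with ten participants numbered 1 to 10
groups = allocate(range(1, 11), seed=2021)
print(groups["intervention"])
print(groups["control"])
```

This sketch covers only the random assignment itself. Concealment and blinding depend on how the resulting list is stored and who has access to it, which cannot be shown in code.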

"Recruitment for a randomised controlled trial is usually more difficult than that for an observational study because the participants must be willing to be assigned randomly and to take or accept treatments to which they may have been blinded. The possibility of receiving a placebo treatment is often a source of concern (Hulley et al, 2001)." (Patel MX, Doku V & Tennakoon T (2003) Challenges in recruitment of research participants. Advances in Psychiatric Treatment 9, p. 231)

Selection bias occurs when certain types of people are more likely to be recruited than others. Attrition occurs when participants leave during a study.

"However, in almost all studies, some participants leave the study midway for various reasons. In this situation, the participants in each group completing the study cannot be taken as being comparable. In particular, if the dropout rate is high or is unequal between various arms, the results may be biased." (www.ncbi.nlm.nih.gov/pmc/articles/PMC6801992/, 23.06.21)

"... bias is expected in the results when the attrition rate exceeds 20% (Polit & Hungler 1995)." (Gul RB, Ali PA. (2010) Clinical trials: the challenge of recruitment and retention of participants. J Clin Nurs 19, p. 228)
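The attrition rate of each study arm can be calculated from the numbers usually given in the participant flow of a report. The following Python sketch is an illustration only: the participant numbers are invented, and the 20% threshold is the rule of thumb quoted above, not a fixed standard.

```python
def attrition_rate(randomised, completed):
    """Share of randomised participants who did not complete the study."""
    if randomised <= 0 or not 0 <= completed <= randomised:
        raise ValueError("need 0 <= completed <= randomised and randomised > 0")
    return (randomised - completed) / randomised


# Hypothetical numbers per arm: (randomised, completed)
arms = {"intervention": (120, 90), "control": (120, 110)}
threshold = 0.20  # rule of thumb from the quotation above

rates = {arm: attrition_rate(*numbers) for arm, numbers in arms.items()}
flagged = [arm for arm, rate in rates.items() if rate > threshold]

for arm, rate in rates.items():
    print(f"{arm}: {rate:.1%} attrition")
print("Arms above the threshold:", flagged)
```

In this invented example, a quarter of the intervention group and about 8% of the control group were lost. The dropout is therefore both high in one arm and unequal between the arms, which are the two warning signs named in the quotations.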

The reader of an interventional study report should judge:

- Is the sample population appropriate to the hypothesis being tested so that the results of the study are generalisable to the target population?

- Is the allocation of the participants to the intervention and control groups randomized, concealed and blinded?

- Do the intervention and control groups have comparable baseline characteristics?

- Is there an attrition bias?

3. The Intervention

"After randomisation there will be two (or more) groups, one of which will receive the test intervention and another (or more) which receives a standard intervention or placebo. Ideally, neither the study subjects, nor anybody performing subsequent measurements and data collection, should be aware of the study group assignment. ... Without effective blinding, if subject assignment is known by the investigator, bias can be introduced because extra attention may be given to the intervention group (intended or otherwise)." (J.M. Kendall (2003) Designing a research project: Randomised controlled trials and their principles. Emergency Medicine Journal, 20, p. 166)

Performance bias is caused by differences in the treatment of participants in addition to the intervention or by differences in the general conditions of the groups.

"Co-interventions are additional treatments, advice or other interventions that a patient may receive, and which may affect the outcome of interest. ... This is particularly problematic if the usage of such a co-intervention differs between the two treatment arms. To avoid this, the study design may require either expressly asking the trial participants to avoid such co-interventions, or close monitoring of their use so that necessary adjustment can be done during analysis or interpretation of the study results." (www.ncbi.nlm.nih.gov/pmc/articles/PMC6801992/, 22.06.21)

Quality control is particularly important for complex interventions.

"Complex interventions are usually described as interventions that contain several interacting components ... It is therefore important to understand the whole range of effects and how they vary, for example, among recipients or between sites. A second key question ... is how the intervention works: what are the active ingredients and how are they exerting their effect" (www.bmj.com/content/337/bmj.a1655.short, 22.06.21)

"A critical aspect of clinical research is quality control. ... Essentially, quality control issues occur in clinical procedures, measuring outcomes, and handling data. Quality control begins in the design phase of the study when the protocol is being written and is first evaluated in the pilot study ... Once the methods part of the protocol is finalised, an operations manual can be written that specifically defines how to recruit subjects, [perform interventions,] perform measurements, etc. This is essential when there is more than one investigator, as it will standardise the actions of all involved. After allowing all those involved to study the operations manual, there will be the opportunity to train (and subsequently certify) investigators to perform various tasks uniformly." (J.M. Kendall (2003) Designing a research project: Randomised controlled trials and their principles. Emergency Medicine Journal, 20, p. 167)

The way an intervention is performed can reduce external validity.

"External validity can ... be affected if trials have protocols that differ from usual clinical practice." (Rothwell PM. (2005) External validity of randomised controlled trials: “To whom do the results of this trial apply?” Lancet 365, p. 88)

The reader of an interventional study report should judge:

- Are the interventions for each group described in sufficient detail to allow replication?

- Are there any co-interventions?

- Are the general conditions the same for all groups?

4. The Potential Confounders

An outcome is influenced by several factors. The outcome is called the "dependent variable" and the factor that the investigator manipulates is called the "independent variable".

The investigator wants to determine how the independent variable (the intervention) affects the dependent variable (the outcome). The investigator therefore wants to eliminate the effects of other factors (variables) on the outcome. These other factors are called "confounding variables".

"Various baseline characteristics of the subjects recruited should be measured at the stage of initial recruitment into the trial. These will include basic demographic observations, such as name, age, sex, hospital identification, etc, but more importantly should include any important prognostic factors. It will be important at the analysis stage to show that these potential confounding variables are equally distributed between the two groups ... The random assignment of subjects to one or another of two groups (differing only by the intervention to be studied) is the basis for measuring the marginal difference between these groups in the relevant outcome." (J.M. Kendall (2003) Designing a research project: Randomised controlled trials and their principles. Emergency Medicine Journal, 20, pp. 165-166)
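One common way to check whether a numerical baseline characteristic is equally distributed between two groups is the standardized mean difference. This measure is not named in the cited article; it is used here only as an illustration, and the function name and the age values are hypothetical.

```python
import math
from statistics import mean, stdev


def standardized_mean_difference(group_a, group_b):
    """Difference of the group means in units of the pooled standard deviation.

    Values close to 0 indicate that the characteristic is similarly
    distributed in both groups.
    """
    pooled_sd = math.sqrt((stdev(group_a) ** 2 + stdev(group_b) ** 2) / 2)
    if pooled_sd == 0:
        raise ValueError("both groups have no variation")
    return (mean(group_a) - mean(group_b)) / pooled_sd


# Hypothetical ages (in years) at baseline
age_intervention = [54, 61, 58, 47, 66]
age_control = [52, 60, 59, 49, 65]

smd = standardized_mean_difference(age_intervention, age_control)
print(f"Standardized mean difference for age: {smd:.2f}")
```

Such a check can only be made for confounders that were actually measured. For unknown or unmeasured confounders, the reader has to rely on the randomization and on a sufficiently large sample.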

The reader of an interventional study report should judge:

- Are there unmeasured or unknown confounders that are not controlled by true randomization in a study with a large sample size?

5. The Outcome

"Researchers need to decide which outcomes are most important, which are secondary, and how they will deal with multiple outcomes in the analysis. A single primary outcome and a small number of secondary outcomes are the most straightforward for statistical analysis but may not represent the best use of the data or provide an adequate assessment of the success or otherwise of an intervention that has effects across a range of domains. ... Long term follow-up may be needed to determine whether outcomes predicted by interim or surrogate measures do occur or whether short term changes persist." (www.bmj.com/content/337/bmj.a1655.short, 23.06.21)

"Ideally, any outcome measurement taken on a patient should be precise and reproducible; it should not depend on the observer who took the measurement. ... However, it is often necessary to use multiple observers, especially in multicentre trials. Training sessions should be arranged to ensure that observers (and their equipment) can produce the same measurements in any given subject." (J.M. Kendall (2003) Designing a research project: Randomised controlled trials and their principles. Emergency Medicine Journal, 20, p. 167)

The reader of an interventional study report should judge:

- Are the outcome measures meaningful?

- Are the outcome measures for each group described in sufficient detail to allow replication?

6. The Study Investigators

"Certain personal attributes are an asset to any research investigator. These include being conscientious, having professional integrity and paying meticulous attention to detail. Good interpersonal skills should include the ability to be respectful, tolerant, tactful and approachable and to show a caring and compassionate attitude. Experience in health services and familiarity with the specialist field and the target population are desirable." (Patel MX, Doku V & Tennakoon T (2003) Challenges in recruitment of research participants. Advances in Psychiatric Treatment 9, p. 235)

The reader of a study report should judge:

- Are there any competing interests?

- In which journal was the article published?

- How many times has the article been cited by others?

- What other articles have the investigators published?

7. The Report

The reader of an interventional study report should judge:

- Does the study report meet the requirements of the CONSORT statement?